How secure should a web application be? For many of us web developers, the question doesn't make much sense. "An application must be as secure as possible. The more secure it is, the better." But that is not a definite answer. It doesn't help to shape a project's security policy. Moreover, sticking to this single directive ("The more secure it is, the better") may do us a disservice. Why? That's what I'm going to discuss in this article.

Security often makes usability worse

Excessive security checks certainly make an application more annoying. This is mostly true for two parts of an application: authentication and the forgotten-password functionality.

Multistage authentication that includes SMS verification and additional protective fields besides the password makes the user experience a little more secure, but less enjoyable. And users certainly won't appreciate your attempts to make their experience more secure if all your service does is let them exchange funny pictures with other users.

Security best practices advise showing as little information as possible when authentication fails, to prevent an intruder from collecting a list of users. According to this advice, if a user went through 33 stages of authentication and made a typo in one field, the best solution would be to show a message like: "Sorry, something went wrong. Please try again." Gratitude to the developers and sincere admiration for their efforts to make the user experience as safe as possible are emotions the user is unlikely to feel in that case.

You must fully understand in which cases the user experience gets worse, and decide whether this is acceptable in your specific situation.

Security makes applications harder to develop and support

The more defense mechanisms an application has, the more complicated it is. The time required to build some parts of the application may increase several times over just to include a minor security improvement.

Much effort can be spent just on making intruders' lives more frustrating rather than on fixing actual security problems. For example, a project may choose to obfuscate method names and parameter names in its REST API.

Developers frequently spend a lot of time preventing an intruder from harvesting a list of usernames through a login form, a registration form and a forgotten-password form.

There are approaches in which an app marks a user as an intruder but doesn't reveal it. All of that user's requests are simply ignored.

If a multistage authentication process includes a secret question that is unique for every user, we can still show a question for a username that doesn't exist in our records. Moreover, the application can store this username and the displayed question in a session or in a database, so that it consistently asks for the same information.
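To give a feeling for how much logic even this small trick needs, here is a minimal sketch. Instead of storing the shown question in a session or database, it derives a stable decoy question from a keyed hash of the username, which is a different technique with the same visible effect. The question pool, the secret and the `REAL_USERS` store are assumptions made up for the illustration.

```python
import hashlib
import hmac

# Hypothetical pool of questions and server-side secret (assumptions).
QUESTIONS = [
    "What was the name of your first pet?",
    "In which city were you born?",
    "What is your favorite book?",
]
SERVER_SECRET = b"replace-with-a-random-secret"

# Hypothetical user store: username -> index of the question the user chose.
REAL_USERS = {"alice": 1}


def question_for(username: str) -> str:
    """Return the real question for known users and a stable decoy otherwise."""
    if username in REAL_USERS:
        return QUESTIONS[REAL_USERS[username]]
    # A keyed hash gives the same unknown name the same question every time,
    # without storing anything per request.
    digest = hmac.new(SERVER_SECRET, username.encode("utf-8"), hashlib.sha256).digest()
    return QUESTIONS[digest[0] % len(QUESTIONS)]
```

Even this version leaves open questions: what happens when the question pool changes, or when a decoy username later registers for real.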

There are plenty of other ways to confuse an intruder. But they all take time to implement. And this logic might be quite intricate even for its authors, even if it's written well and has comments. Most importantly, it doesn't actually fix any security issue; it just makes such issues harder to find.

It's not always simple to separate "well-designed and truly safe functionality" from "wild mind games with an imaginary hacker". Especially because the fine line between these two extremes is not absolute and greatly depends on how attractive your application is to potential hackers.

Security makes applications harder to test

All our security logic must be tested. Unit tests, integration tests or manual testing: we should choose an appropriate approach for every single security mechanism we have.

We can't just give up on testing our defense logic, because bugs tend to appear in our work. And even if we were able to write everything correctly in the first place, there is always a chance that bugs will be introduced during maintenance, support and refactoring. Nobody starts a project by writing legacy code. The code becomes legacy over time.

It's not sensible to thoroughly test all business logic while assuming that our security mechanisms are perfect, absolute and error-free.

If security logic is tested manually, the question is how often it must be done. If our application is even moderately complicated, there can be dozens, if not hundreds, of places where broken authentication can occur. For instance, when some ID parameter in a request is changed, the server returns information that must not be accessible to us (strictly speaking, this is broken access control). Checking every such case is a lot of work. Should we check it before every major release? Should we assign a dedicated person to this task? Or should we even have a whole team for this?

These questions are important. Broken authentication can easily be introduced into a project. We must be vigilant when making even a tiny change to our model or adding a new REST method. There is no simple and universal answer to this problem. But there are approaches that allow dealing with it consistently throughout a project. For instance, in the CUBA platform we use roles and access groups. They let us configure which entities are accessible to which users. Configuring these rules still takes some work, but the rules themselves are uniform and consistent.
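The idea of uniform rules can be sketched in a few lines. This is not the CUBA API; the `User` class, the `READ_RULES` table and the helper names are hypothetical and only show why a single rule table is easier to test than checks scattered across REST methods.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class User:
    name: str
    roles: frozenset


# Hypothetical rule table: entity name -> roles allowed to read it.
READ_RULES = {
    "Order": frozenset({"manager", "sales"}),
    "Salary": frozenset({"hr"}),
}


def can_read(user: User, entity: str) -> bool:
    """Deny by default: entities without a rule are readable by nobody."""
    allowed = READ_RULES.get(entity, frozenset())
    return bool(user.roles & allowed)


def check_all_rules(users, expectations):
    """Return the (user, entity) pairs whose actual access differs from the expected one."""
    return [
        (user.name, entity)
        for user in users
        for entity, expected in expectations[user.name].items()
        if can_read(user, entity) != expected
    ]
```

With such a table, one automated test that calls `check_all_rules` with the expected access matrix can replace a large part of the manual "change the ID and see what happens" checks.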

Apart from broken authentication, there are dozens of security problems that should be tested. When implementing a new mechanism or piece of logic, we must consider how it will be tested. Things that are not tested tend to break over time. And then we get not only security problems, but also false confidence that everything is OK.

There are two types of security mechanisms that cause the most trouble: mechanisms that work only in the production environment, and mechanisms that represent a second (third, fourth) layer of security.

Defense mechanisms that work only in production. Let's assume that there is a session token cookie that must have the "Secure" flag. But if we use HTTP everywhere in our test environment, there are separate configurations for testing and production, and therefore we are not testing exactly the product that will be released. During migrations and other changes the "Secure" flag can be lost, and we won't even notice. How do we deal with this problem? Should we introduce one more environment to be used as pre-production? If so, what part of our functionality should be tested in this environment?
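One partial answer is an automated check that builds the cookie with the production settings and asserts that the flag is there. The sketch below uses Python's standard `http.cookies` module; the cookie name `SESSIONID` and both helper functions are assumptions, not part of any particular framework.

```python
from http.cookies import SimpleCookie


def build_session_cookie(token: str, secure: bool) -> str:
    """Build a Set-Cookie value; `secure` would come from the environment config."""
    cookie = SimpleCookie()
    cookie["SESSIONID"] = token
    cookie["SESSIONID"]["httponly"] = True
    cookie["SESSIONID"]["path"] = "/"
    if secure:
        cookie["SESSIONID"]["secure"] = True
    return cookie["SESSIONID"].OutputString()


def has_secure_flag(set_cookie_value: str) -> bool:
    """Look for the Secure attribute, ignoring the leading name=value pair."""
    attributes = [part.strip().lower() for part in set_cookie_value.split(";")]
    return "secure" in attributes[1:]
```

A test that calls `has_secure_flag(build_session_cookie(token, secure=True))` with the production configuration would at least catch the case where the flag is silently dropped during a refactoring, even if the test environment itself runs over HTTP.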

Multilayered defense mechanisms. People experienced in security tend to create security logic that can be tested only when other security mechanisms are turned off. It actually makes sense: even if an intruder manages to find a vulnerability in the first layer of our defense, he will be stuck on the second. But how is it supposed to be tested? A typical example of this approach is the use of different database users for different users of the app. Even if our REST API contains broken authentication, the hacker won't be able to edit or delete any data, because the database user doesn't have permissions for these actions. But such configurations evidently tend to become outdated and break if they are not maintained and tested properly.

Many security mechanisms make our applications less secure

The more defense checks we have, the more complicated the app is. The more complicated the app is, the higher the probability of making a mistake. The higher the probability of making a mistake, the less secure our application is.

Once again, let's consider a login form. It's quite simple to implement a login form with two fields: username and password. All we need to do is check that a user with the provided name exists in the system and that the password is entered correctly. Well, it's also advisable to check that our application doesn't reveal which field contained the mistake, to prevent an intruder from harvesting usernames, although this practice can be sacrificed in some applications for a more pleasant user experience. Anyway, we also have to implement some kind of brute-force defense mechanism, which, of course, should not contain a fail-open vulnerability. It's also a good idea not to reveal to the intruder that we know he is an intruder. We can just ignore his requests and let him think that he is still hacking us. Another thing to check is that we don't log user passwords. And there is actually another bunch of less important things to consider. All in all, a standard login form is a piece of cake, isn't it?
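Even a stripped-down version of such a "simple" form already looks like this. The sketch is an illustration under assumptions, not a production implementation: the `LoginService` class, the in-memory stores, the threshold of five attempts and the PBKDF2 parameters are all made up for the example.

```python
import hashlib
import hmac
import os

MAX_FAILURES = 5  # assumed lockout threshold
GENERIC_ERROR = "Invalid username or password"


def hash_password(password: str, salt: bytes) -> bytes:
    return hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, 100_000)


class LoginService:
    def __init__(self):
        self._users = {}     # username -> (salt, password hash)
        self._failures = {}  # username -> consecutive failed attempts
        # Dummy credentials, so unknown usernames cost the same hashing work.
        self._dummy_salt = os.urandom(16)
        self._dummy_hash = hash_password("dummy", self._dummy_salt)

    def register(self, username: str, password: str) -> None:
        salt = os.urandom(16)
        self._users[username] = (salt, hash_password(password, salt))

    def login(self, username: str, password: str) -> str:
        try:
            locked = self._failures.get(username, 0) >= MAX_FAILURES
        except Exception:
            # Fail closed. The dict used here never raises; the except branch
            # marks where a real counter store (cache, database) could fail.
            locked = True
        salt, expected = self._users.get(username, (self._dummy_salt, self._dummy_hash))
        matches = hmac.compare_digest(hash_password(password, salt), expected)
        if locked or username not in self._users or not matches:
            self._failures[username] = self._failures.get(username, 0) + 1
            return GENERIC_ERROR  # the same message for every kind of failure
        self._failures[username] = 0
        return "OK"
```

Note what is still missing: unlocking after a timeout, limits per IP address, cleanup of the failure counters, and making sure the password never reaches the logs.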

Multistage authentication is a completely different thing. Some kind of token can be sent to an e-mail address or via SMS. Or there can be several steps that involve entering more and more information. All of this is quite complicated. In theory, this approach should reduce the chance of a user account being hacked, and if the functionality is implemented properly, it does. There is still a possibility of being hacked (neither SMS, nor e-mail, nor anything else gives us a 100% guarantee), but it is reduced. But the authentication logic, which was already quite complex, becomes much more complicated, and the probability of making a mistake increases. A single bug can make our new model less secure than it was when it was just a simple form with two fields.

Moreover, intrusive and inconvenient security measures may push users to store their sensitive data less securely. For example, if a corporate network requires changing passwords monthly, users who don't understand such annoying measures might start writing their passwords on sticky notes and putting them on their screens. "It's entirely the users' fault if they commit such follies," you may object. Well, maybe. But it's definitely your problem too. At the end of the day, isn't satisfying users' needs our ultimate goal as developers?

Got it. So what are you suggesting?

I suggest deciding from the start how far we are ready to go to obstruct an intruder. Are we ready to optimize our login form so that the response time of login requests doesn't reveal whether a user with a given name exists? Are we ready to implement checks so reliable that even a close friend of the victim, holding the victim's cellphone, cannot access the application? Are we ready to make development several times more complicated, inflate the budget and sacrifice a good user experience for the sake of making the intruder's life a little more miserable?

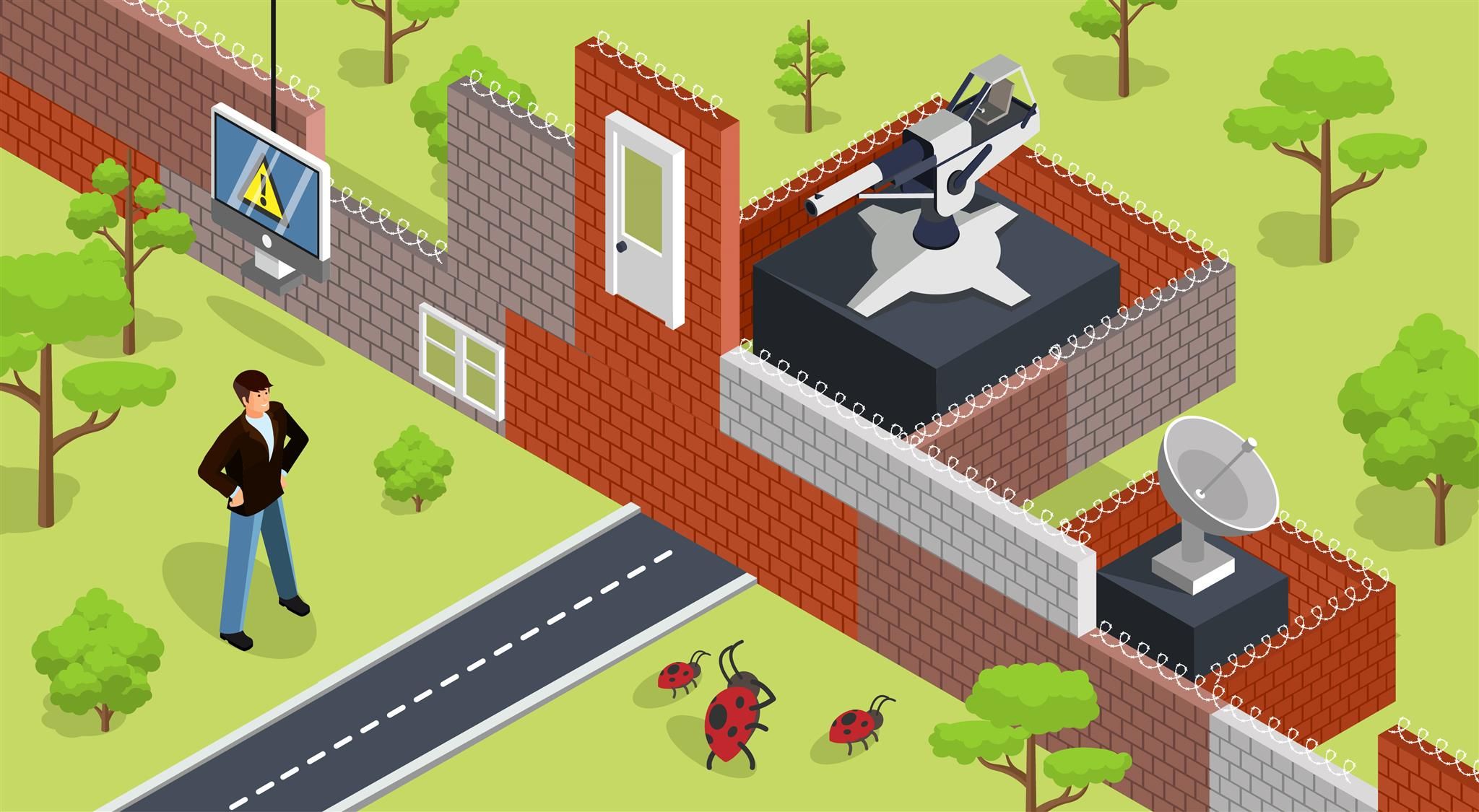

We can work on security endlessly, building new layers of protection, improving monitoring and user behavior analysis, and making information harder to obtain. But we should draw a line that separates the things we must do from the things we must not do. Certainly, as the project evolves, this line can be reconsidered and moved.

In the worst-case scenario, a project can spend a lot of resources on building an impenetrable defense against one type of attack while having an enormous security flaw somewhere else.

When deciding whether to implement some security mechanism or build another layer of security, we must consider many things:

- How easy is it to exploit the vulnerability? Broken authentication can be exploited easily, and doing so doesn't require any serious technical background. Therefore, the problem is important and should be dealt with accordingly.

- How critical is the vulnerability? If an intruder is able to obtain sensitive information about other users or, even worse, edit it, then it's quite a serious problem. If an intruder can collect the IDs of some products in our system but cannot use these IDs for anything particularly interesting, the problem is much less severe.

- How much more secure will the application be if we implement this feature? If we are talking about additional layers of security (for instance, checking for XSS problems on output when we have already implemented a good mechanism for input sanitization), or we are just trying to make an intruder's life harder (for example, by concealing the fact that we have marked him as a hacker), then the priority of these changes is not high. Maybe they should not be implemented at all.

- How much time will it take?

- How much will it cost?

- How much worse will the user experience get?

- How difficult will it be to maintain and test the feature? A common practice is never to return a 403 code on an attempt to access a restricted resource, and always to return a 404 code. This makes it harder to collect resource identifiers. Although this solution makes it more difficult to gain information about the system, it also complicates testing and production error analysis. And it can even harm the user experience, because a user can get a confusing message that there is no such resource, although the resource exists and has, for some reason, become inaccessible to the user.
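The 404-instead-of-403 practice from the last point can be sketched as follows. The `RESOURCES` dictionary and the function name are hypothetical; the point is that the real reason has to be kept somewhere, here in a log, or production error analysis becomes guesswork.

```python
import logging

logger = logging.getLogger("access")

# Hypothetical in-memory data: resource id -> owner.
RESOURCES = {"doc-1": "alice", "doc-2": "bob"}


def get_resource_status(resource_id: str, user: str) -> int:
    """Return the HTTP status the client sees for a read attempt."""
    owner = RESOURCES.get(resource_id)
    if owner is None:
        logger.info("not found: %s requested by %s", resource_id, user)
        return 404
    if owner != user:
        # The client sees 404, but the log keeps the real reason.
        logger.warning("forbidden (masked as 404): %s requested by %s", resource_id, user)
        return 404
    return 200
```

A tester looking only at responses cannot tell the two 404 cases apart, which is exactly the maintenance cost described above.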

Well, surely, in your specific case there may be a need for a multistage authentication mechanism. But you must fully understand in which ways it impedes development and makes the application less enjoyable for users.

You are justifying a negligent approach towards security

Well, I am not. There are certainly security-sensitive applications that will benefit from additional security measures, even if these measures increase expenses and ruin the user experience.

And, of course, there are a number of vulnerabilities that should not appear in any application, no matter how small it is. CSRF is a typical example of such a vulnerability. Defending against it doesn't make the user experience worse and doesn't cost a lot. Many server-side frameworks (such as Spring MVC) and front-end frameworks (such as Angular) support CSRF tokens out of the box. Furthermore, with Spring MVC we can quickly add any required security header: Access-Control-* headers, Content-Security-Policy, etc.
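To show how little is involved, here is a minimal sketch of the token pattern that such frameworks implement for us. It is not the code of Spring or Angular; the `session` dictionary and both function names are assumptions for the illustration.

```python
import hmac
import secrets


def issue_csrf_token(session: dict) -> str:
    """Generate a random token, remember it in the session and return it for the form."""
    token = secrets.token_urlsafe(32)
    session["csrf_token"] = token
    return token


def is_valid_csrf(session: dict, submitted) -> bool:
    """Accept a state-changing request only if it carries the session's token."""
    expected = session.get("csrf_token")
    if not expected or not isinstance(submitted, str):
        return False
    # Constant-time comparison of the two values.
    return hmac.compare_digest(expected.encode("utf-8"), submitted.encode("utf-8"))
```

In a real project it is usually better to rely on the framework's built-in support than to maintain this by hand.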

Broken authentication, XSS, SQL injection and several other vulnerabilities must not be allowed in our applications. Defenses against them are easy to grasp and are thoroughly explained in a wide range of books and articles. We can also add to this list passing sensitive information in URL parameters, storing weakly hashed passwords and other bad security practices.
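SQL injection is a good example of a defense that is easy to grasp: pass user input as a query parameter instead of concatenating it into the SQL text. The sketch below uses Python's standard `sqlite3` module; the `users` table and the helper functions are made up for the demonstration.

```python
import sqlite3


def make_demo_db() -> sqlite3.Connection:
    """Create a throwaway in-memory database with two users."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")
    conn.executemany("INSERT INTO users (name) VALUES (?)", [("alice",), ("bob",)])
    return conn


def find_user(conn: sqlite3.Connection, name: str) -> list:
    # The ? placeholder makes the driver pass `name` as data, never as SQL text.
    return conn.execute("SELECT id, name FROM users WHERE name = ?", (name,)).fetchall()
```

With this approach a classic payload such as `' OR '1'='1` is treated as an odd-looking name that simply matches no rows.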

Ideally, a project should have a manifesto that describes the project's security policy and answers questions such as:

- What security practices are we following?

- What is our password policy?

- What and how often do we test?

- etc.

This manifesto will be different for different projects. If a program inserts user input into an OS command, the security policy must explain how to do it safely. If the project lets users upload files (such as avatars) to a server, the security policy must enumerate the possible security problems and how to deal with them.
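For the OS command case, the policy could prescribe something like the following sketch: validate the input against an allow-list pattern and pass it as a separate argument, without a shell. The pattern, the function names and the demo command (which just prints the length of the file name using the current Python interpreter) are assumptions for the illustration.

```python
import re
import subprocess
import sys

# Assumed allow-list: short names made of letters, digits, dot, dash, underscore.
SAFE_NAME = re.compile(r"[A-Za-z0-9_.-]{1,64}")


def build_command(filename: str) -> list:
    """Validate the input and return the command as a list of arguments."""
    if not SAFE_NAME.fullmatch(filename) or filename.startswith("-"):
        raise ValueError("unsupported file name")
    # Hypothetical command: print the length of the file name.
    return [sys.executable, "-c", "import sys; print(len(sys.argv[1]))", filename]


def run(filename: str) -> str:
    # A list of arguments and no shell=True: the input is never parsed by a shell.
    result = subprocess.run(build_command(filename), capture_output=True, text=True, check=True)
    return result.stdout.strip()
```

The leading-dash check is there because an otherwise valid name such as `-rf` could be interpreted as an option by the called program.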

Certainly, it's not an easy task to create and maintain such a manifesto. But expecting each member of a team (including QA and support) to remember and stick to every security practice they should follow is rather naive. Moreover, many vulnerabilities can be handled in several ways. If there is no definite policy on the matter, developers may use one practice in some places (for example, validating input) and do something completely different in others (for example, sanitizing output). Even if the code is good and clean, it's still inconsistent. And inconsistency is fertile ground for bugs, support problems and false expectations.

For small teams with a permanent technical lead, code review may be enough to avoid the aforementioned problems, even if there is no manifesto.

Summary:

- When working on security, we should consider how security-sensitive our application is. Banking applications and applications for sharing funny stories require different approaches.

- When working on security, we should consider how much it will harm the user experience.

- When working on security, we should consider how much it will complicate the code and make maintenance more difficult.

- Security mechanisms should be tested.

- It's advisable to teach team members how to deal with security issues and/or perform a thorough code review for every commit in a project.

- There are certain vulnerabilities that have to be eliminated in every application: XSS, CSRF, injections (including SQL injection), broken authentication, etc.